- #HOW TO INSTALL APACHE SPARK ON WINDOWS HOW TO#

- #HOW TO INSTALL APACHE SPARK ON WINDOWS SOFTWARE#

- #HOW TO INSTALL APACHE SPARK ON WINDOWS DOWNLOAD#

- #HOW TO INSTALL APACHE SPARK ON WINDOWS WINDOWS#

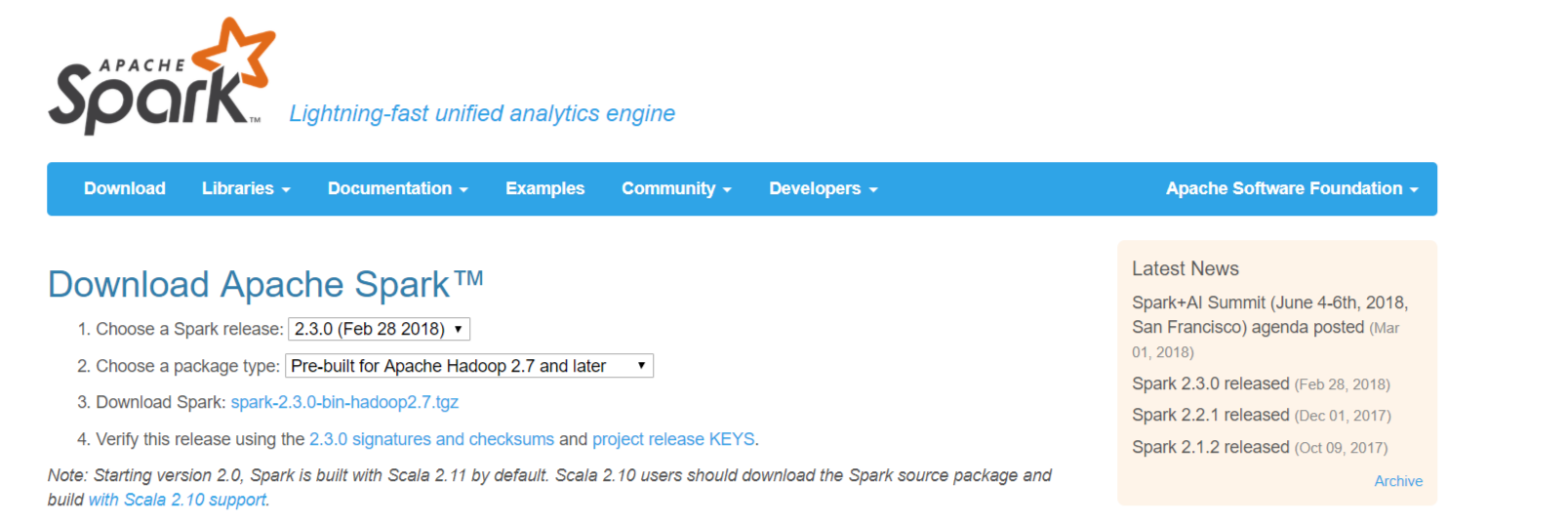

Go to this page and choose the latest stable version pre-built for Hadoop 2.7 and later (see figure below). Extract the compressed file to any location you choose, and make sure that the path to this location doesn’t contain any spaces.
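The two requirements in the previous paragraph can be checked with a short script. Three things here are assumptions, not part of the original guide:

- The helper names are hypothetical.
- The example folder name `spark-2.3.2-bin-hadoop2.7` is only an illustration.
- The extracted folder is assumed to keep the archive's `-bin-hadoop2.7` suffix.

The script uses `ntpath` so that Windows paths are handled the same way on any machine.

```python
import ntpath


def path_has_spaces(path):
    # The guide asks for an extraction location whose path has no spaces.
    return " " in path


def is_hadoop27_build(spark_home):
    # Assumption: the extracted folder keeps the archive's name, e.g.
    # spark-<version>-bin-hadoop2.7 for the "pre-built for Hadoop 2.7" package.
    folder = ntpath.basename(spark_home.rstrip("\\"))
    return folder.endswith("-bin-hadoop2.7")


def spark_shell_path(spark_home):
    # The pre-built package ships Windows launchers such as bin\spark-shell.cmd.
    return ntpath.join(spark_home, "bin", "spark-shell.cmd")
```

For example, `path_has_spaces(r"C:\Program Files\spark")` is `True`, which flags a location the guide tells you to avoid.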

#HOW TO INSTALL APACHE SPARK ON WINDOWS DOWNLOAD#

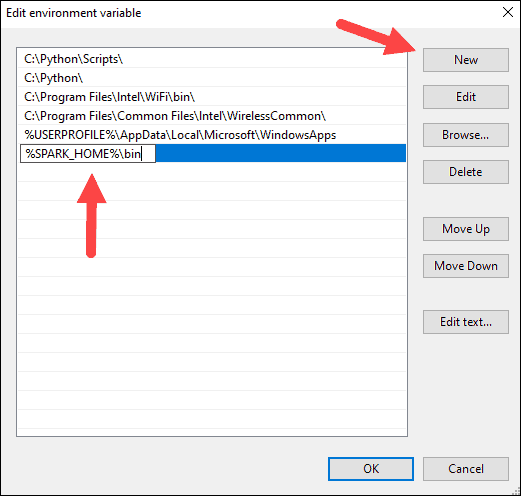

Since it’s not easy to build Spark from source, we will download a pre-built package that contains all the Spark binaries needed to run it.

Figure: Scala download page

Install Scala with the downloaded MSI file, then add a new variable named SCALA_HOME to your System Variables that points to the parent folder of Scala. Then add %SCALA_HOME%\bin to the Path system variable.
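The "HOME variable plus `%HOME%\bin` in Path" pattern can be modelled on a plain dictionary. This is a sketch only: `add_home_variable` is a hypothetical helper, and it does not touch the real Windows System Variables, which the guide edits through the usual dialog. It appends the literal `%SCALA_HOME%\bin` entry, as the guide does, and leaves expansion to Windows.

```python
def add_home_variable(env, name, home):
    """Return a copy of env with NAME=home and a %NAME%\\bin entry in Path."""
    new_env = dict(env)
    new_env[name] = home
    bin_entry = "%" + name + "%\\bin"  # e.g. %SCALA_HOME%\bin, as in the guide
    entries = [e for e in new_env.get("Path", "").split(";") if e]
    if bin_entry not in entries:  # avoid duplicate entries on repeated runs
        entries.append(bin_entry)
    new_env["Path"] = ";".join(entries)
    return new_env
```

The same helper covers the JAVA_HOME step, for example `add_home_variable(env, "JAVA_HOME", r"C:\jdk")`, where the JDK path is hypothetical.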

#HOW TO INSTALL APACHE SPARK ON WINDOWS WINDOWS#

Download the Scala Windows installer from this page: scroll down to the “Other resources” section and download the MSI file for Windows (see figure below).

To install the JDK, go to this page and download the latest version of the JDK. After you install it, add the JAVA_HOME variable to your System Variables and make sure that its value points to the JDK parent folder (see figure below for a demonstration).

Figure: JAVA_HOME system variable

After you add this variable, modify the Path system variable and add a new entry: %JAVA_HOME%\bin. This lets the Windows command line recognize Java commands.

Figure: Path variable demonstration

To check whether Java was installed correctly, run `java -version`.
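The `java -version` check can also be scripted. In this sketch the helper names are hypothetical. It relies on two facts:

- `java -version` prints its banner to stderr.
- Java 8 reports versions as `1.8.0_xxx`, while Java 9 and later report `9`, `11.0.2`, and so on.

```python
import re
import subprocess

_VERSION_RE = re.compile(r'version "([^"]+)"')


def java_major_version(version_output):
    """Extract the major Java version from `java -version` output."""
    match = _VERSION_RE.search(version_output)
    if match is None:
        raise ValueError("no version string found")
    parts = re.split(r"[._+-]", match.group(1))
    # Legacy scheme: "1.8.0_202" means Java 8.
    if parts[0] == "1":
        return int(parts[1])
    # Newer scheme: "11.0.2" or "17" means Java 11 or 17.
    return int(parts[0])


def installed_java_major():
    # Runs the same command as the guide; the banner goes to stderr.
    result = subprocess.run(["java", "-version"],
                            capture_output=True, text=True, check=True)
    return java_major_version(result.stderr)
```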

Note: you don’t need any prior knowledge of the Spark framework to follow this guide.

First, we need to install Java to execute Spark applications. You don’t need to install the JDK if you only want to run Spark applications and won’t develop new ones using Java; however, in this guide we will install the JDK.

#HOW TO INSTALL APACHE SPARK ON WINDOWS HOW TO#

This guide is for beginners who are trying to install Apache Spark on a Windows machine. I will assume that you have a 64-bit version of Windows and that you already know how to add environment variables on Windows.

– Change directory to winutils\bin by executing: `cd c:\winutils\bin`.
– Change access permissions using winutils.exe: `winutils.exe chmod 777 \tmp\hive`.

HiveContext is a specialized SQLContext for working with Hive in Spark. The next step is to change access permissions on the c:\tmp\hive directory using winutils.exe.

– Create a tmp directory containing a hive subdirectory, if it does not already exist, so that its path becomes c:\tmp\hive.
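The c:\tmp\hive steps can be scripted as well. This is a Windows-only sketch under stated assumptions:

- winutils.exe sits at c:\winutils\bin\winutils.exe, as this guide sets it up.
- The function names are hypothetical.
- Calling winutils.exe by its full path replaces the `cd c:\winutils\bin` step.

```python
import ntpath
import os
import subprocess


def winutils_chmod_command(winutils_home, target):
    # Equivalent of: winutils.exe chmod 777 <target>, using the full path.
    winutils_exe = ntpath.join(winutils_home, "bin", "winutils.exe")
    return [winutils_exe, "chmod", "777", target]


def prepare_hive_scratch_dir(winutils_home=r"c:\winutils", hive_dir=r"c:\tmp\hive"):
    # Create c:\tmp\hive (no error if it already exists) ...
    os.makedirs(hive_dir, exist_ok=True)
    # ... then open up its permissions with winutils.exe (Windows only).
    subprocess.run(winutils_chmod_command(winutils_home, hive_dir), check=True)
```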

#HOW TO INSTALL APACHE SPARK ON WINDOWS SOFTWARE#

Please ensure that you install Java 8 to avoid installation errors.
– Apache Spark version 2.4.0 has a reported bug that makes it incompatible with Windows, as it breaks worker.py.
– If you are using a Python 3 development environment, ensure that Python 2.7 is not also installed independently.

Install Python Development Environment

Enthought Canopy is a Python development environment, just like Anaconda. If you are already using one, you are covered as long as it is a Python 3 (or later) environment. (If you prefer not to use a development environment, you can also install Python 3 manually and set up the environment variables for your installation yourself.)

Download the version of Enthought Canopy compatible with your system (2.1.9 for Windows) and follow the installation wizard to complete the installation. (A pre-installed Python 2.7 may conflict with the Python 3 installation done by the development environment.)

Apache Hive is data warehouse software for analyzing and querying large datasets, principally stored in Hadoop files, using SQL-like queries. Spark SQL supports Apache Hive through HiveContext.

Spark uses Hadoop internally for file system access. Even if you are not working with Hadoop (or are only using Spark for local development), Windows still needs Hadoop to initialize the Hive context; otherwise Java throws a java.io.IOException. You can fix this by adding a dummy Hadoop installation that tricks Windows into believing that Hadoop is actually installed:

– Download the Hadoop 2.7 winutils.exe.
– Create a directory winutils with a subdirectory bin, and copy the downloaded winutils.exe into it so that its path becomes c:\winutils\bin\winutils.exe.

It is advised to change the log4j log level from ‘INFO’ to ‘ERROR’ to avoid unnecessary console clutter in spark-shell. To do this, open log4j.properties in an editor and replace ‘INFO’ with ‘ERROR’ on line number 19.
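The log4j step can also be applied programmatically instead of by hand. This sketch assumes the setting on "line 19" is `log4j.rootCategory=INFO, console`, as in Spark 2.x's `conf/log4j.properties.template`. That is an assumption about your file's contents, so check the line before relying on it. The function name is hypothetical, and every other line is left untouched.

```python
def quiet_log4j(properties_text):
    """Switch the log4j root logger from INFO to ERROR, leaving other lines as-is."""
    lines = properties_text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        # Assumption: the root level is set on a log4j.rootCategory line.
        if line.startswith("log4j.rootCategory=") and "INFO" in line:
            lines[i] = line.replace("INFO", "ERROR", 1)
    return "".join(lines)
```

To use it, read your log4j.properties file into a string, pass it through `quiet_log4j`, and write the result back.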
Pointers for smooth installation:
– As of the writing of this blog, Spark is not compatible with Java version 9 or later.

Spark supports a number of programming languages, including Java, Python, Scala, and R. In this tutorial, we will set up Spark with a Python development environment using the Spark Python API (PySpark), which exposes the Spark programming model to Python.
